Knowledge Gain Dashboard

EEG video analytics platform

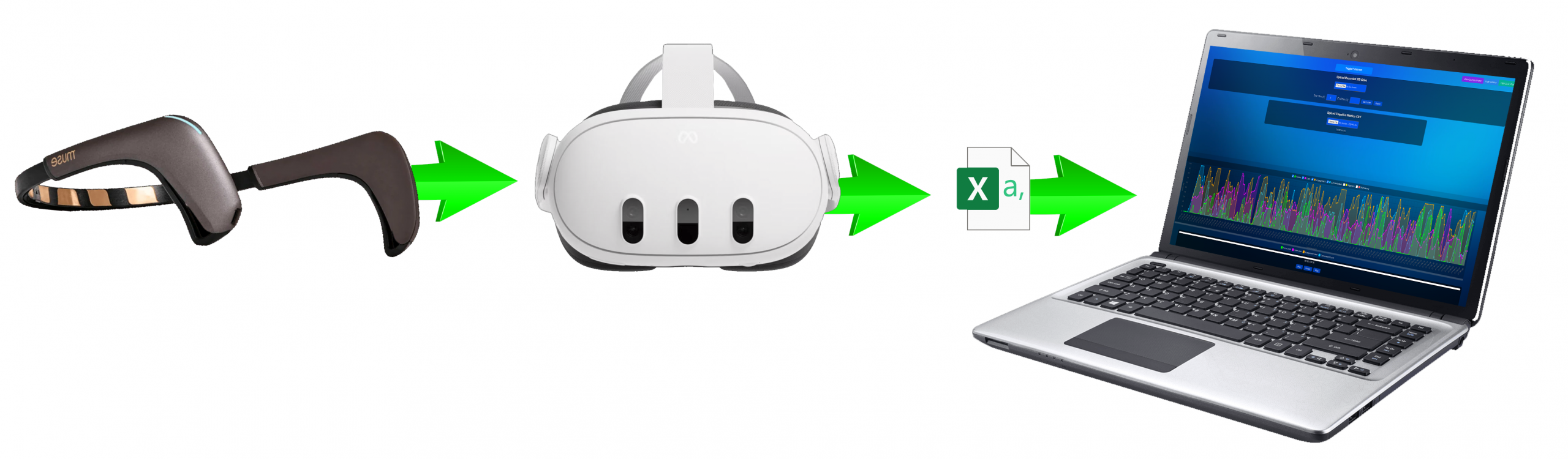

EEG related data flow diagram

AI related data flow diagram

Introduction

Despite advances in educational technology, university teaching remains largely designed for large cohorts and standardised delivery, overlooking the diverse learning needs of individual students. Learners with ADHD or other special educational needs often struggle to engage effectively in such rigid and non-personalised environments.

This raises a critical question: can we design immersive classrooms that adapt to individual cognitive needs?

The COVID-19 pandemic forced universities to rapidly adopt remote teaching at scale, exposing several limitations—impersonal delivery, dependence on devices and internet connectivity, and a lack of real-time personalisation. Additionally, online platforms are poorly suited to demonstrating in-class experiments or practical exercises, which are essential for experiential and active learning.

Current educational content is still predominantly delivered through text and video on flat screens. While this approach is convenient, it fails to support the understanding of complex 3D concepts, particularly in subjects such as anatomy, architecture, or engineering, where spatial reasoning is vital. Learners often struggle to grasp depth, scale, and the relationships between objects when content is limited to two-dimensional formats (Huang & Tseng, 2025).

Immersive learning environments offer a promising solution by providing interactive and spatially rich experiences. However, these systems are often complex to use and lack personalised guidance and real-time feedback, which can lead to disengagement and suboptimal learning outcomes. To address these challenges, conversational AI and neurofeedback can be integrated to enhance learning, enabling real-time adaptation to the learner’s cognitive and emotional state (González-Erena, Fernández-Guinea, & Kourtesis, 2024).

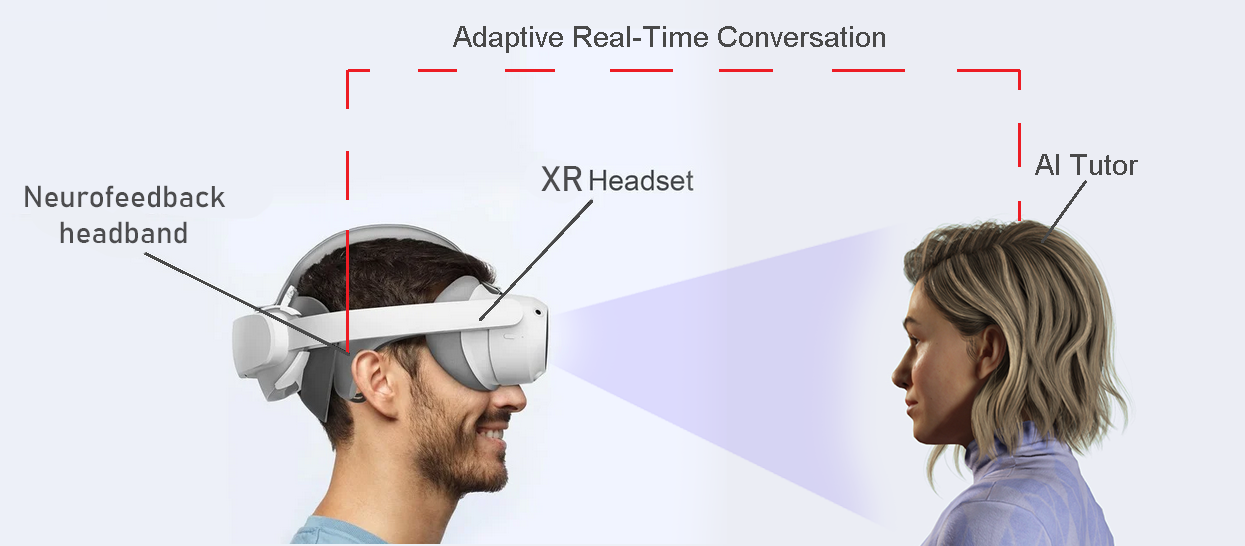

This PhD research proposes an adaptive learning framework within Mixed Reality (MR) environments, integrating an AI-enhanced virtual tutor and an exploratory neurofeedback component. The neurofeedback component, which relies on data collected via a brain–computer interface (BCI), is intended to investigate whether real-time neurological data can inform and enhance the design of learning systems in immersive environments (see Figure 1). Together, these technologies are intended to create an interactive one-to-one learning experience tailored to the learner’s individual profile. By continuously monitoring the learner’s conversation with the AI tutor, alongside their cognitive engagement as measured through neurofeedback, the system aims to provide adaptive support, with the goal of improving engagement, retention, and overall learning outcomes in immersive educational settings.

To facilitate this investigation, several immersive environments will serve as research instruments. They are expected to provide insights into how the integration of these technologies could shape the future of personalised education, exploring their potential for real-world applications and evaluating their effectiveness.

Aims / Objectives

The aim of this PhD research is to design, implement, and evaluate an adaptive extended reality (XR) learning environment that integrates conversational AI with the goal of enhancing learner engagement and educational outcomes in higher education.

This research will also compare different XR environments to determine their relative effectiveness in supporting learning. As a secondary aim, the research will investigate the feasibility of integrating neurofeedback to further improve the system’s adaptability.

The proposed research is guided by the following objectives:

- To identify learner needs, limitations of current virtual learning systems, and design requirements for effective XR-based learning through qualitative interviews and quantitative surveys with university students.

- To develop two prototype XR learning environments (a baseline non-adaptive version and a conversational AI-driven adaptive version) based on insights from the data collection.

- To evaluate the effectiveness of conversational AI tutors in XR environments by comparing learning outcomes between the static XR prototype and the AI-enhanced version.

- To investigate the feasibility of neurofeedback-supported AI tutors and their effect on learner engagement and performance compared with AI-only XR environments.

- To contribute evidence-based design recommendations for the future development of adaptive XR learning systems that leverage conversational AI and neurofeedback.

Design

The study aims to investigate the effect of adaptive technologies in extended reality (XR) classrooms on students’ knowledge retention. A quantitative, between-subjects design will be used. The independent variable is the learning condition, with three levels: XR alone, XR with an AI assistant, and XR with an AI assistant combined with neurofeedback.
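In a between-subjects design each participant experiences only one condition, so group sizes should stay balanced. One common way to achieve this is block randomisation, sketched below under stated assumptions: the condition labels, the helper name `assign_conditions`, and the choice of block randomisation are illustrative and are not the study's confirmed allocation procedure.

```python
import random

# Hypothetical short labels for the three levels of the independent variable.
CONDITIONS = ("XR", "XR+AI", "XR+AI+NF")


def assign_conditions(participant_ids, seed=None):
    """Hypothetical helper: block-randomise participants so that every
    consecutive block of three receives each condition exactly once."""
    rng = random.Random(seed)  # seeded generator makes the allocation reproducible
    assignment = {}
    block_size = len(CONDITIONS)
    for start in range(0, len(participant_ids), block_size):
        block = list(CONDITIONS)
        rng.shuffle(block)  # random order of conditions within this block
        block_ids = participant_ids[start:start + block_size]
        for pid, condition in zip(block_ids, block):
            assignment[pid] = condition
    return assignment
```

Calling `assign_conditions(ids, seed=...)` with a fixed seed lets the allocation be reproduced and audited later; when the number of participants is not a multiple of three, group sizes differ by at most one.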

- The primary dependent variable (DV) is knowledge retention, measured as the improvement from a pre-test to a post-test quiz administered in each session/phase.

- The secondary dependent variable is AI interaction, measured using interaction logs and screen recordings in phases 2 and 3.

- In addition, engagement level and cognitive load will be measured across all conditions, on an exploratory basis, to examine their relationship with knowledge retention. If a positive relationship is observed (i.e., higher engagement is associated with better test scores), the engagement index formula will be used to implement adaptive neurofeedback support in the third phase. In this condition, if engagement drops below a threshold, the AI tutor dynamically adapts its interaction (e.g., by providing simplified explanations) to reinforce learning. Cognitive load measurements are intended to inform the optimisation of the XR classrooms across the three phases.
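The primary DV can be computed directly from paired quiz scores. The sketch below shows the raw improvement (post-test minus pre-test) averaged per condition, plus a normalised gain (the fraction of the possible improvement actually achieved) as an optional alternative that accounts for ceiling effects. The record format `(condition, pre, post)` and the function names are hypothetical, and the normalised gain is an added option rather than a measure the proposal commits to.

```python
from collections import defaultdict
from statistics import mean


def raw_gain(pre, post):
    """Improvement from pre-test to post-test, in quiz points."""
    return post - pre


def normalised_gain(pre, post, max_score):
    """Optional alternative: fraction of the possible improvement achieved.
    Returns None when the pre-test score is already at the maximum,
    because no improvement is possible in that case."""
    if pre >= max_score:
        return None
    return (post - pre) / (max_score - pre)


def mean_gain_by_condition(records):
    """records: iterable of (condition, pre, post) tuples (hypothetical format).
    Returns the mean raw gain for each condition."""
    gains = defaultdict(list)
    for condition, pre, post in records:
        gains[condition].append(raw_gain(pre, post))
    return {condition: mean(values) for condition, values in gains.items()}
```

Per-condition means like these are only descriptive; comparing the three conditions would still require an appropriate inferential test.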
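The threshold-based adaptation in the third phase can be sketched as a small monitoring loop. The proposal does not specify which engagement index formula will be used; this sketch assumes the widely used EEG ratio β / (α + θ), computed from band powers that a BCI pipeline is assumed to supply. The threshold, the window size, and the names `engagement_index` and `EngagementMonitor` are hypothetical placeholders, not values or components from the study.

```python
from collections import deque
from statistics import mean


def engagement_index(alpha, theta, beta):
    """Assumed engagement index: beta / (alpha + theta) band power."""
    denominator = alpha + theta
    if denominator <= 0:
        raise ValueError("alpha + theta band power must be positive")
    return beta / denominator


class EngagementMonitor:
    """Hypothetical monitor: smooths the index over a sliding window and tells
    the AI tutor whether to switch to simplified explanations.
    threshold and window are placeholder values, not study parameters."""

    def __init__(self, threshold=0.5, window=5):
        self.threshold = threshold
        self.recent = deque(maxlen=window)  # keeps only the last `window` readings

    def update(self, alpha, theta, beta):
        """Add one band-power reading; return (smoothed index, tutor mode)."""
        self.recent.append(engagement_index(alpha, theta, beta))
        smoothed = mean(self.recent)
        # Smoothed engagement below threshold: cue the tutor to simplify.
        mode = "simplified" if smoothed < self.threshold else "standard"
        return smoothed, mode
```

Averaging over a window keeps the tutor from reacting to a single noisy EEG sample; a deployed system might also add hysteresis so that the mode does not flip back and forth around the threshold.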